Singapore, Dec. 08, 2025 (GLOBE NEWSWIRE) -- For years, progress in AI was driven by one principle: bigger is better. But the era of simply scaling up compute may be ending. As OpenAI co-founder and former chief scientist Ilya Sutskever recently noted, “the next leap in AI won’t come from a bigger data center, but from a fundamental breakthrough in how machines learn from experience”.

Macaron AI – a startup known for its Personal AI Agent – is betting on this very idea.

Today, Macaron AI is officially launching Mind Lab, its dedicated research arm, and announcing a series of achievements and product updates that validate the vision of Experiential Intelligence.

The Mind Lab team has become the first to run high-performance reinforcement learning on an open-source trillion-parameter AI model using Low-Rank Adaptation (LoRA), all on only ~10% of the usual GPU budget. In other words, work that might previously have required hundreds or thousands of GPUs can now be done with a tenth of them. This efficiency breakthrough not only marks a new chapter for large-scale AI training, but also cements Macaron’s transition from “just an app” into a company with serious AI research chops.

Beyond Scaling Laws: Toward Experiential Intelligence

The industry is recognizing a critical limitation of today’s largest models: despite setting new benchmark records with every update, they often stumble on real-world nuance and “long-tail” situations.

Simply throwing more data and parameters at the problem is yielding diminishing returns.

Frontier models can pass bar exams or generate code yet still make basic mistakes – a disconnect sometimes attributed to training on static datasets in narrow settings. The emerging consensus is that truly “intelligent” behavior may require something more: the ability to learn continually from experience.

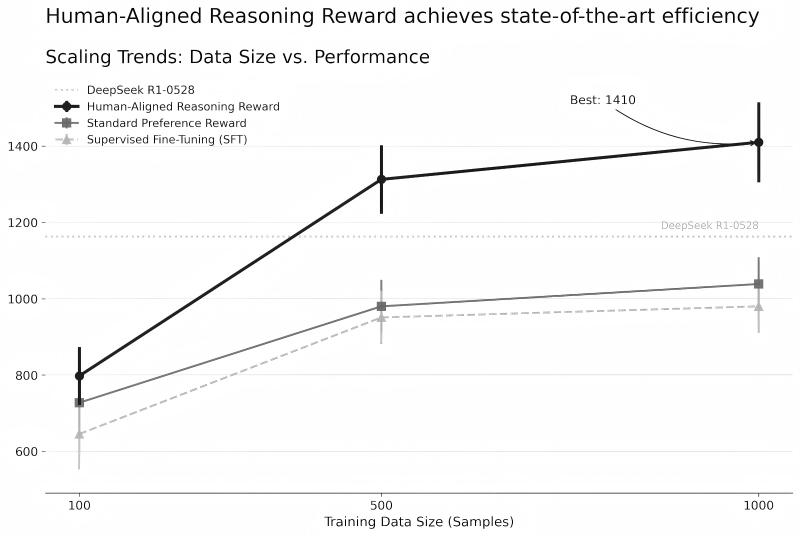

Macaron AI’s philosophy aligns squarely with this view. The company advocates experience-driven intelligence, moving beyond blind adherence to scaling laws and instead closing the loop between real-world usage and model learning. Rather than retraining giant models from scratch for marginal gains, Macaron’s approach is to start with powerful foundation models and iteratively refine them through real interactions, feedback, and tasks. “Real intelligence learns from real experience” is the ethos guiding Mind Lab’s work, in contrast to competitors locked in a pre-training arms race. By focusing on Research–Product co-design, the team leverages data from its actual product (the Macaron personal agent) to inform research improvements, and vice versa. They claim to have demonstrated empirically that training on real user feedback can yield larger performance boosts than simply adding more pre-training data, a shift they dub the rise of “Experiential Intelligence.”

This vision builds on the idea that an AI should evolve more like a human: continually updating itself through real interaction with users. In fact, Macaron itself debuted as a Personal AI Agent. Unlike productivity bots that churn through office tasks, it learns your tastes, anticipates your goals, and analyzes conversations in real time. It creates personalized apps on the fly to enrich everyday routines.

Macaron aims to be the true Personal AI Agent of the experience-driven era, and Mind Lab is the engine making it possible.

Mind Lab: The Research Team Behind Macaron AI’s Personal Agent

Today’s announcement formally introduces Mind Lab as the core research arm behind Macaron AI. While Macaron’s app has been in the spotlight for its friendly interface and clever mini-app generation, Mind Lab has quietly been solving the massive technical challenges that power that experience. The lab consists of a 10-person all-star research team with deep roots in AI – including researchers hailing from organizations like OpenAI and DeepMind, and alumni of top universities such as Tsinghua, MIT, and Cornell. Collectively, the team has co-authored over 200 papers (with 30,000+ citations) in areas spanning reinforcement learning, large-scale optimization, and AI systems. This pedigree is not just for show: it underpins Macaron’s technical defensibility. The company wants to be seen as having a “frontier research stack” under the hood of its consumer product. In practical terms, that means breakthroughs developed by Mind Lab feed directly into making Macaron AI more capable and efficient than competitors that rely only on off-the-shelf models.

In a front-end layout task, reinforcement learning on human preferences—rather than model scores—led to visibly better outputs, showing how real feedback can meaningfully refine system behavior.

Crucially, Mind Lab’s mission isn’t to win the parameter-count race, but to chart a different path to AI intelligence. “We don’t blindly scale up, we scale smarter,” says Andrew, Macaron’s founder and head of research (himself an MIT-trained AI researcher). The lab’s focus is on algorithms that allow AI agents to learn from interactive experiences, whether that’s feedback from users, environmental exploration, or solving downstream tasks, thereby improving in a way static training can’t. By explicitly building reinforcement learning and continual adaptation into the training loop, Mind Lab aims to capture the messy, dynamic aspects of the real world that large static corpora miss. In short, Mind Lab acts as the experimental brain trust turning the concept of experiential intelligence into tangible techniques. With its official debut and the results unveiled today, Macaron is signaling to the industry (and to potential hires and investors) that it is not just another app wrapped around other companies’ LLM APIs, but a full-stack AI innovator in its own right.

Check out Macaron here: https://macaron.im/

Trillion-Parameter Reinforcement Learning – at 10× Efficiency

The centerpiece of Mind Lab’s announcement is a technical first: high-performance reinforcement learning (RL) on Kimi K2, an open-source model with 1 trillion parameters, using a parameter-efficient fine-tuning method called LoRA (Low-Rank Adaptation). Pushing RL to this scale is a monumental feat: ordinarily, tuning a model with hundreds of billions (let alone a trillion) parameters via RL would be prohibitively expensive, often considered to require “thousand-GPU-class” compute. Macaron’s team, however, found a way to do it with roughly an order of magnitude fewer resources. Compared to conventional approaches, their LoRA-based RL pipeline uses only about 10% of the GPU compute that one might expect. In a recent technical write-up, the team described cutting the time per RL training iteration by more than 6× through a synchronized rollout and training architecture. The result: they achieved the desired model alignment and performance at roughly 10% of the usual training cost.

This breakthrough tackles a cost problem that has loomed over large-model alignment. By combining hybrid parallelism strategies (spanning data, tensor, pipeline, and expert parallelism) with LoRA fine-tuning, Mind Lab’s system can train and adapt truly colossal models without “breaking the bank”. For context, earlier this year Macaron set a benchmark by training a 671B-parameter model with just 48 H100 GPUs, already a remarkable efficiency gain. Now, with 1T-parameter RL training demonstrated, the team has leapt even further, and it describes this as the first successful RL run at this scale on an open model using LoRA. The choice of LoRA is key: instead of updating all trillion weights, LoRA freezes the base model and trains small low-rank update matrices (often amounting to <0.5% of the parameters) to adapt it. This dramatically lowers the compute and memory overhead of training at minimal cost to accuracy; a low-rank update can retain over 90% of the performance of full fine-tuning while using a fraction of the compute.
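To make the LoRA idea concrete, here is a minimal pure-Python sketch of a LoRA-adapted linear layer. It is illustrative only and is not Mind Lab’s code: the class name `LoRALinear`, the `alpha / rank` scaling, and the zero initialization of `B` follow the common LoRA convention rather than anything stated in this release, and the 7168 × 7168 matrix size in the usage note is a hypothetical example. The frozen weight `W` is never modified; only the small factors `A` and `B` would be trained, which is where the parameter savings come from.

```python
import random


class LoRALinear:
    """Illustrative LoRA-adapted linear layer (a sketch, not Mind Lab's implementation).

    The base weight W (d_out x d_in) stays frozen; only the low-rank factors
    A (rank x d_in) and B (d_out x rank) would be updated during training.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        self.weight = weight              # frozen base weight, stored as a list of rows
        self.d_out = len(weight)
        self.d_in = len(weight[0])
        self.rank = rank
        self.scale = alpha / rank         # common LoRA scaling factor alpha / r
        rng = random.Random(seed)
        # A starts with small random values; B starts at zero, so B @ A == 0 at init
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(self.d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(self.d_out)]

    @staticmethod
    def _matvec(matrix, vector):
        return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

    def forward(self, x):
        base = self._matvec(self.weight, x)                     # W x (frozen path)
        delta = self._matvec(self.B, self._matvec(self.A, x))   # B (A x) (trainable path)
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        return self.rank * (self.d_in + self.d_out)

    def frozen_params(self):
        return self.d_in * self.d_out


def lora_fraction(d_out, d_in, rank):
    """Trainable LoRA parameters as a fraction of the frozen matrix they adapt."""
    return rank * (d_in + d_out) / (d_in * d_out)
```

For a square d × d projection, a rank-r adapter adds 2rd trainable parameters against d² frozen ones, a fraction of 2r/d. With the hypothetical d = 7168 and r = 16, `lora_fraction(7168, 7168, 16)` equals 1/224, about 0.45%, in line with the “<0.5% of the parameters” figure above. Because `B` starts at zero, the adapted layer initially reproduces the base model exactly, so training begins from the pretrained behavior.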

The team applied its system to RL on Kimi K2 for a set of long-horizon reasoning and agent tasks.

Key observations

- 10× GPU Efficiency: LoRA-based reinforcement learning on Kimi K2 achieves the same alignment quality with just 10% of the GPU footprint required for full-parameter training—making trillion-scale RL both tractable and cost-effective.

- Stable Learning Curves: Training runs exhibit smooth, reliable learning curves, with steadily increasing rewards and task success rates, free from instability or catastrophic collapse.

- Generalization Intact: Despite targeted adaptation, downstream evaluations on unseen benchmarks confirm that the model retains its broad general-purpose capabilities while gaining sharper task alignment.

Together, these findings demonstrate that trillion-parameter LoRA RL is not only feasible but operationally practical, especially when the system is architected from the ground up with mixture-of-experts (MoE) parallelism in mind.

Moreover, Mind Lab open-sourced the core RL algorithm and contributed its optimizations to major AI frameworks. Its techniques have been merged into NVIDIA’s NeMo Megatron-Bridge (a platform for training giant models) and into ByteDance’s VolcEngine RL (VERL) library, both popular infrastructure for large-scale AI research. This means any organization using those frameworks can now leverage Macaron’s method for LoRA-based RL at scale. The community response has been enthusiastic: maintainers of these projects have recognized the contribution, and early adopters in open-source circles are taking note. By integrating with established platforms, Mind Lab isn’t just running an impressive demo in-house; it’s advancing the state of the art for everyone. In an arena where proprietary giants guard their techniques, such openness can also be seen as a strategic move to attract top-tier talent and collaborators who value impact on the wider AI ecosystem.

Read the full technical blog here: How we build trillion parameter reasoning RL with 10% GPUs

Rethinking AI Memory: “Memory Diffusion” and Wise Forgetting

Macaron AI’s Memory Diffusion reframes how an AI stores and updates information. Instead of treating memory as an external database or a simple replay of past conversations, Macaron continually re-compresses its memory over the agent’s trajectory. In practice, this means the AI’s long-term context is not a static log of everything said, but an evolving, distilled state that gets refined with each interaction. As new experiences come in, older details aren’t just accumulated or dropped – they’re compressed and integrated into a compact representation of what truly matters. This dynamic approach allows Macaron to carry knowledge forward without the baggage of irrelevant data, maintaining a rich personal context for the user while staying computationally efficient.

At the heart of Memory Diffusion is a three-step cycle known as Mask–Allocate–Refill. In each cycle, the model performs the following steps to refresh its memory store:

- Mask: The system identifies and masks out segments of the internal memory that are deemed low-value or stale. By temporarily removing these less relevant details from active memory, Macaron creates “space” to reprocess information that might need refinement. This is akin to pinpointing which parts of the memory to reconsider or compress further.

- Allocate: Next, Macaron intelligently allocates its fixed memory budget to different pieces of information based on their estimated importance. In simple terms, the model decides how many “tokens” (the chunks of text or data) each memory should get. Critical, decision-relevant memories are given a larger share of the context window, while trivial or redundant details get a smaller allotment (or none at all). This step ensures that important information has enough room for detailed recall, whereas unimportant data won’t clutter the memory.

- Refill: Finally, the model refills the masked-out slots by regenerating a condensed version of the important information. Using its language model capabilities, Macaron produces fresh, summarized memory chunks that fit the allocated token budgets. In essence, it writes a shorter, salient “memo” for those parts of the past, effectively compressing them before re-inserting into the memory. After refilling, the memory is once again a complete timeline of information – only now in a more compact, value-dense form.
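The three steps can be sketched as a toy Python loop. This is an illustration under stated assumptions, not Macaron’s implementation: importance scores are supplied by hand (the release says the model estimates them), whitespace-separated words stand in for tokens, the hypothetical `summarize` helper simply truncates text where a real system would ask the language model for a condensed rewrite, and the per-cycle `decay` factor is an added assumption used to model how stale memories lose value over time.

```python
from dataclasses import dataclass, replace


@dataclass
class MemoryItem:
    text: str
    importance: float  # assumption: a score in [0, 1]; the real system estimates this with the model


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words stand in for model tokens.
    return len(text.split())


def summarize(text: str, max_tokens: int) -> str:
    # Hypothetical stand-in for LM-based compression: keep the first max_tokens words.
    return " ".join(text.split()[:max_tokens])


def mask(memory: list[MemoryItem], threshold: float) -> list[MemoryItem]:
    """Mask: drop items whose importance has fallen below the threshold."""
    return [m for m in memory if m.importance >= threshold]


def allocate(memory: list[MemoryItem], budget: int) -> list[int]:
    """Allocate: split a fixed token budget in proportion to importance (rounded down)."""
    total = sum(m.importance for m in memory)
    if total == 0:
        return [0] * len(memory)
    return [int(budget * m.importance / total) for m in memory]


def refill(memory: list[MemoryItem], quotas: list[int]) -> list[MemoryItem]:
    """Refill: rewrite each kept item to fit its quota; a zero quota drops the item."""
    return [replace(m, text=summarize(m.text, q)) for m, q in zip(memory, quotas) if q > 0]


def diffusion_cycle(memory: list[MemoryItem], budget: int,
                    threshold: float = 0.2, decay: float = 0.95) -> list[MemoryItem]:
    # Assumed staleness model: every item loses a little importance each cycle.
    aged = [replace(m, importance=m.importance * decay) for m in memory]
    kept = mask(aged, threshold)
    return refill(kept, allocate(kept, budget))
```

Because `allocate` rounds each share down, the refilled memory never exceeds `budget` tokens no matter how many turns have been appended. That bounded size is the property behind the constant recall cost claimed for Memory Diffusion: the agent always reads a memory of at most `budget` tokens rather than a transcript that grows with the conversation.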

This Mask–Allocate–Refill cycle implements a form of intelligent forgetting. The system deliberately discards the noise while keeping the signal: low-value context gets pruned or heavily compressed, whereas decision-critical facts are preserved with higher fidelity. It’s inspired by the way humans naturally forget trivial details. For example, when driving to work you might notice dozens of billboards and license plates, only to forget them minutes later. Your brain sheds those irrelevant details in order to remember what’s important (like the route or a sudden road hazard). Likewise, Macaron’s memory engine continuously filters out or abstracts away the clutter (say, an old casual remark) while retaining the core information that could influence its decisions or the user’s needs. By selectively forgetting the fluff, the AI can focus better, improving both the efficiency and the relevance of its responses.

By continuously triaging and compressing its memory stream, Macaron sustains coherent long-horizon reasoning without a bloated context window. Because the refreshed memory always fits a fixed token budget, the cost of recalling its past stays effectively constant (O(1) complexity) regardless of the conversation’s length. In essence, Memory Diffusion enables Macaron to remember what truly matters, much like a person recalling a meaningful conversation, and gracefully let go of the rest.

Read the full technical blog here: Exploring Agentic Memory Beyond Reasoning and Tool-Use

From Lab to Product: A Faster and Smarter AI

Impressively, these research advances are already bearing fruit in the product itself. Alongside Mind Lab’s debut, the company is rolling out a suite of major upgrades to Macaron AI:

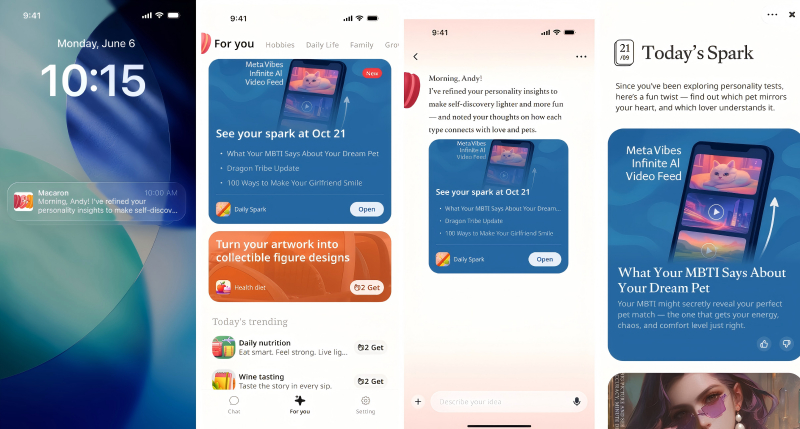

- 10× Faster App Generation: One of Macaron’s signature capabilities is creating customized apps (tiny apps for tasks like habit tracking, trip planning, etc.) on demand from a user’s request. This process has gotten dramatically faster. What used to take around 20 minutes now often completes in 2 minutes or less, a 90% reduction in generation time. The speed-up comes directly from model-level optimization: the more efficient reinforcement learning and fine-tuning pipeline means Macaron’s large model can compile and deploy code much more quickly, not through any shortcut or caching. For users, this is immediately noticeable. Ask Macaron to build an app, say a personalized workout planner, and it will go from idea to interactive tool in a couple of minutes. The faster turnaround not only improves user experience but also enables more complex or iterative app-building flows that wouldn’t have been practical before.

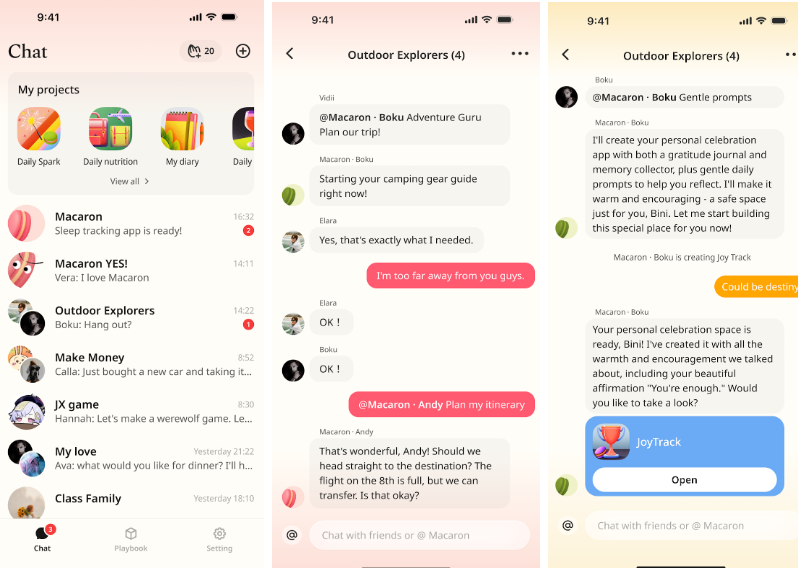

- Social Collaboration (Group AI Chat): Macaron is moving beyond the one-on-one chatbot paradigm. The latest update introduces multi-user group chats, where you can invite friends, family, or colleagues into an AI-powered conversation. In these shared spaces, Macaron acts like a facilitator and creative partner for the whole group. For example, a team can brainstorm with the AI in the loop, bouncing ideas off both humans and the AI assistant. Friends can share a funny AI-generated story and riff on it together. Study groups can jointly query Macaron for research assistance. This collaborative angle – multiple people engaging with one AI agent – transforms the experience from a solo interaction into a shared creative session. It effectively turns Macaron into a “group member” that can participate in discussions. By making AI a collective experience, Macaron aims to deepen its usefulness (and enjoyment) as a tool that fits naturally into how people already work and socialize together. This feature positions Macaron less as a private assistant and more as a collaborative AI workspace.

- “Daily Spark” Personalized Feed: Also launching is Daily Spark, a kind of AI-curated daily digest unique to each user. Unlike generic news feeds that just bombard you with headlines, Daily Spark takes into account your past interactions, stated interests, and even your mood. The result is a short, “memory-aware” feed designed to inspire and inform without overwhelming. One day your Spark might include a gentle motivational quote or a reflective micro-essay based on something you chatted about earlier (for example, a follow-up on your goal to start meditating), plus a quick tip on a hobby you enjoy, a summary of a news item you’d likely care about, and perhaps a book or movie recommendation tailored to your tastes. Another day it might lean more practical: a reminder of an upcoming personal event and a suggestion from Macaron on how to prepare. The content ranges from poetry and philosophical musings to wellness tips and niche news briefs. The key is contextual relevance: it feels like a mini newsletter compiled just for you by a friend who knows you. Macaron’s team contrasts this with traditional AI news digests, which are one-size-fits-all; Daily Spark is meant to be “warm, contextual, and intuitively relevant,” not a firehose of random information.

- Unified Memory Across Chats and Apps: In a bid to make the AI truly feel like a cohesive assistant, Macaron has unified the memory behind its free-form chat and its mini-apps. Practically, this means information is shared seamlessly between the two. If you log your meals or workouts in a Macaron-generated tracker app, you can immediately reference that data in a conversation – “How many calories did I consume this week?” or “Show me my progress over the last month.” Conversely, if you discuss something in the chat (say you mention an upcoming anniversary or a goal to read more books), Macaron can inject that context into a relevant app or remind you later via the appropriate tool. Previously, these were somewhat siloed: the chat had its memory, and each app had its own data. Now it’s all one connected knowledge base about you (with appropriate privacy safeguards). This bridging of contexts makes Macaron feel far more integrated – less like a bunch of separate AI tricks, and more like one continuous, evolving personal agent that accompanies you through different modes of interaction.
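The move from siloed stores to one shared knowledge base can be pictured with a small Python sketch. Everything in it is hypothetical: `UnifiedMemory`, `MealTrackerApp`, and `Chat` are illustrative names, not Macaron’s API, and a real system would add the privacy safeguards mentioned above plus far richer querying. The point is structural: the generated app and the chat hold a reference to the same store, so a meal logged in one is immediately visible to the other.

```python
from datetime import date, timedelta


class UnifiedMemory:
    """Hypothetical single store shared by free-form chat and generated mini-apps."""

    def __init__(self):
        self.records = []  # (day, source, kind, value) tuples logged by apps
        self.facts = {}    # free-form facts picked up in conversation

    def log(self, day, source, kind, value):
        self.records.append((day, source, kind, value))

    def total(self, kind, start, end):
        """Sum every record of the given kind dated between start and end (inclusive)."""
        return sum(v for d, _, k, v in self.records if k == kind and start <= d <= end)


class MealTrackerApp:
    """A generated mini-app that writes into the shared store instead of its own silo."""

    def __init__(self, memory):
        self.memory = memory

    def log_meal(self, day, calories):
        self.memory.log(day, "meal_tracker", "calories", calories)


class Chat:
    """The chat interface reads from (and writes to) the same store the apps use."""

    def __init__(self, memory):
        self.memory = memory

    def note(self, key, value):
        self.memory.facts[key] = value

    def calories_this_week(self, today):
        monday = today - timedelta(days=today.weekday())
        return self.memory.total("calories", monday, today)


memory = UnifiedMemory()
tracker = MealTrackerApp(memory)
chat = Chat(memory)
tracker.log_meal(date(2025, 12, 7), 600)   # a Sunday, so it belongs to the previous week
tracker.log_meal(date(2025, 12, 8), 500)
tracker.log_meal(date(2025, 12, 10), 700)
print(chat.calories_this_week(date(2025, 12, 10)))  # 1200
```

The same store works in the other direction: a fact the user mentions in chat (for example via `chat.note("anniversary", ...)`) lands in `memory.facts`, where a reminder app holding the same `UnifiedMemory` could read it.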

Each of these upgrades stems from the fundamental work Mind Lab has done on large-model efficiency and long-term learning. Faster model inference and fine-tuning yield faster app generation. Better memory algorithms yield a more personalized feed and coherent cross-session understanding. Scalable training makes features like group AI interactions possible without the system breaking. It’s a textbook example of research driving product innovation in real time. As Macaron rolls these features out (just in time for the end-of-year user growth push), it strengthens the product’s appeal to both tech-savvy users and general consumers: the former appreciate the under-the-hood prowess, while the latter enjoy the smoother, smarter experience without needing to know why it’s better.

The Road Ahead: Experience-Driven AI Comes of Age

With Mind Lab’s debut, Macaron AI is positioning itself at the forefront of a new wave in AI, one where continual learning from real experience takes center stage. The high-profile reinforcement learning result (trillion-scale RL at 10× efficiency) is a proof point that this young company can punch above its weight in fundamental AI research. By sharing these advances openly and integrating them with platforms from the likes of NVIDIA and ByteDance, Macaron is also plugging into the broader AI community in a credible way. This helps attract top talent who want to work on hard problems with real impact, and it signals to investors and industry observers that Macaron owns its technology stack. It’s not just packaging someone else’s large language model; it’s inventing new ways to make AI learn and adapt.

Perhaps most importantly, today’s news underlines a broader narrative: the AI industry may be stepping out of the era of mindless scaling and into an era of “Experiential Intelligence.” Macaron AI’s bet is that an AI which learns from the continual feedback of living, breathing users will ultimately outsmart one that merely trained on terabytes of text. That philosophy is now embodied in Mind Lab and exemplified by the breakthroughs it’s showcasing. If the rest of the industry follows suit, we could soon see AI systems that grow more useful the longer they run, much like a human gaining wisdom with experience. For now, Macaron is one of the pioneers of this shift, melding cutting-edge research with product design to usher in the era of experience-driven AI. Users of Macaron’s personal agent likely won’t need to know terms like LoRA or memory diffusion, but they may notice that their AI companion just keeps getting better the more they use it. And that, in the end, is what experiential intelligence is all about.