Hong Kong, China, Dec. 31, 2025 (GLOBE NEWSWIRE) -- For years, 3D pipelines have lived with an awkward compromise: geometry defines the shape, and textures are something you slap on afterward. It’s efficient, and it works, until it doesn’t.

The cracks show up the moment a model needs to be more than a pretty render. When you move into 3D printing, manufacturing, or any high-fidelity output, that classic “build the mesh first, then project details onto it” workflow can turn into a game of whack-a-mole: stretched textures, mismatched seams, missing detail in occluded areas, and hours of manual cleanup before anything is safe to send to a printer.

Hitem3D says its newly released Hitem3D 2.0 is built to attack that split head-on. The headline feature is integrated texture generation at 1536³ Pro resolution, designed to make textures feel less like a layer painted onto a surface and more like something that’s generated as part of the object itself — tied to the geometry, scale, and material logic from the start.
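For a sense of scale, the arithmetic behind a figure like 1536³ can be sketched in a few lines. This assumes the number refers to a cubic sampling grid, which the announcement does not spell out, and the 1024³ comparison point is purely hypothetical, chosen only to illustrate how quickly the cell count grows.

```python
def grid_cells(side: int) -> int:
    """Number of cells in a cubic grid with `side` samples per axis."""
    return side ** 3

# Assumption: "1536³" denotes a cubic grid with 1536 samples per axis.
pro_cells = grid_cells(1536)

# Hypothetical comparison grid, not a figure from the announcement.
reference_cells = grid_cells(1024)

# Cell count grows with the cube of the side length: (1536 / 1024) ** 3
growth = pro_cells / reference_cells

print(f"{pro_cells:,} cells, {growth:.3f}x the hypothetical 1024-per-axis grid")
```

Under that assumption, a 1.5x increase per axis means roughly 3.4x as many cells to fill consistently.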

“We weren’t trying to make models look more ‘flashy,’” a Hitem3D technical lead says. “We’re focused on making the structure, texture, and production logic more coherent — because if generation isn’t solid, printing and mass production turns into rework.”

From “pasted on” to “grown in”

A lot of the current wave of AI 3D tools still inherits a familiar problem: the output can look impressive from the front, but it’s fragile once you rotate it, inspect it up close, or try to push it into a real production workflow. In practice, the “AI magic” often ends up creating a new kind of technical debt — you gain speed upfront, then pay it back later with fixes.

Hitem3D’s pitch is that the solution isn’t just “higher resolution textures,” but a different generation logic: a structure-aware integrated texture generation approach that treats texture as something that should be consistent with geometry, not merely aligned to it.

The first demo example is a dragon-shaped asset in which scales, horns, and whiskers follow the underlying geometry rather than appearing as a flat overlay. Hitem3D claims the detail holds even as an untextured mesh, with volume and layering that remain intact before finishing, making the asset more directly usable for printing workflows.

Why this matters for printing (and not just visuals)

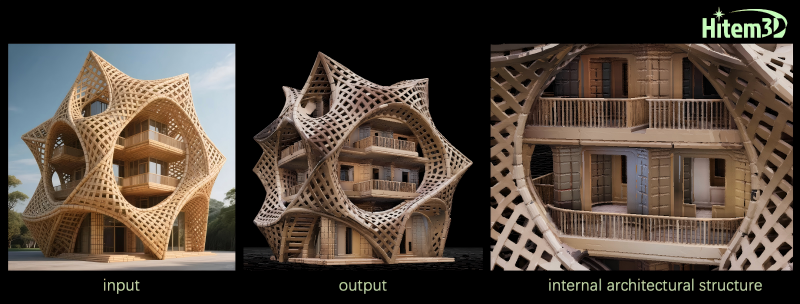

The gap between “looks good” and “production-ready” is often found in the overlooked details: the underside of a seated figure, the interior folds of a cloak, or the complex internal cavities of an architectural model. For 3D printing professionals, these are the high-stakes areas where traditional textures often fail, leading to costly print failures.

Hitem3D 2.0 leans heavily on that reality. Instead of relying on the usual multi-view projection approach, the company says it generates texture information during geometry reconstruction — aiming for consistent completion across both visible and occluded regions.

In one architectural example described by a 3D printing studio operator, the model’s interior walls, the inner faces of openwork structures, and balcony railings were filled in with consistent material and texture logic. These are the kinds of surfaces that often come out blank and go unnoticed until the defect shows up in print.

“Before, a model could look fine from the front,” the operator notes, “but printing exposed the issues: empty bottoms, missing interior surfaces. Now the completion happens during generation, and we can send it to print with far fewer fixes.”

The technical spine: constraints, lighting, and material truth

Hitem3D says the engine behind 2.0 is trained on a large base of 3D asset data to learn spatial continuity and surface logic in three dimensions — so the system can do reconstruction under structural constraints rather than “guess and paste.”

Practically, that’s meant to reduce the classic projection failures: stretching, misalignment, distortion, and that uncanny “texture sliding over the mesh” look that instantly gives away AI outputs.

Hitem3D is also targeting a different, quieter killer: lighting.

If you’ve ever tried to extract clean material information from source imagery, you know the trap: highlights and shadows get baked into what’s supposed to be base color and material properties, and suddenly your asset responds inconsistently under standard PBR lighting. Hitem3D 2.0 introduces illumination-aware semantic recognition paired with Physically Based Accuracy, meant to separate lighting effects (shadow, specular, ambient influence) from the underlying surface properties. The idea is to create a cleaner, more uniform base for downstream PBR workflows, with less of that “looks correct only in the lighting that created it” problem.
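The baked-lighting trap can be illustrated with a toy example. The sketch below is not Hitem3D's method, which the announcement does not describe. It assumes the simplest possible model (a purely diffuse surface where observed color equals base color times a known scalar shading term), and the function name `remove_shading` is hypothetical.

```python
def remove_shading(observed_rgb, shading, eps=1e-6):
    """Recover base color assuming observed = base_color * shading (diffuse only)."""
    s = max(shading, eps)  # guard against division by zero in full shadow
    return tuple(min(1.0, channel / s) for channel in observed_rgb)

# One surface color photographed under two lighting conditions.
true_base_color = (0.6, 0.4, 0.2)
fully_lit = tuple(c * 1.0 for c in true_base_color)
in_shadow = tuple(c * 0.5 for c in true_base_color)

# Naively using the photos as base color gives two different "materials".
baked_mismatch = fully_lit != in_shadow

# Dividing out the shading term gives the same base color for both samples.
recovered_lit = remove_shading(fully_lit, 1.0)
recovered_shadow = remove_shading(in_shadow, 0.5)
```

In real imagery the shading term is unknown and specular highlights do not follow this simple product, which is why the separation is a hard estimation problem rather than a division.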

A second demo example — a wooden crate — is presented as a case where material logic matters more than raw sharpness: wood grain orientation and plank seams are intrinsically aligned with the object's geometry. Furthermore, surface wear is intelligently distributed—concentrating on high-contact edges and base surfaces—resulting in a model that reflects authentic usage patterns rather than arbitrary procedural noise.

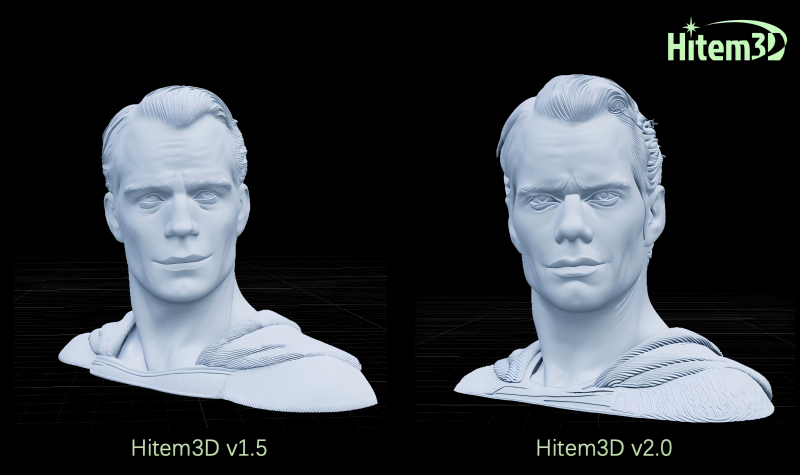

Portraits, but at “hair level”

If there’s one category that reliably breaks 3D generation systems, it’s humans — and hair, in particular, tends to turn into either a clumpy helmet or a mess of intersecting geometry.

Hitem3D 2.0 adds a portrait mode described as “strand-level fidelity,” aiming to reconstruct head shape and facial proportions structurally while preserving fine detail like hair flow direction, brows, and lashes, plus cleaner transitions between skin and hair material regions.

A long-time 3D modeling user described the difference in practical terms: clearer curl layers, less chaos, fewer interpenetration issues, and less manual cleanup time on face and hair details.

Production features: segmentation, retopo, embossing, and “printing-ready”

On the production side, Hitem3D is positioning 2.0 as something closer to an end-to-end system than a one-off generator:

- semantic 3D model segmentation so the system understands parts, not just surfaces

- automatic retopology and AI 3D embossing features for manufacturing-oriented workflows

- export and workflow support aimed at moving assets from generation into toolchains without weeks of repair

And importantly: Hitem3D highlights automated export of print-ready multi-material assets (4 materials), with the note that the same concept extends to 8 or 16 materials. That’s a meaningful distinction from “full-color printing” in the casual sense: the point here is that the model is split and structured in a way that can actually map to a multi-material printer pipeline without hours of manual mesh surgery.
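What splitting a model for a fixed number of materials involves can be sketched as follows. This is an illustrative simplification, not Hitem3D's export logic: it assumes each face carries a single RGB color, uses a hypothetical 4-entry palette, and assigns each face to the nearest palette color by squared distance.

```python
from collections import defaultdict

def nearest_material(color, palette):
    """Index of the palette entry closest to `color` (squared RGB distance)."""
    return min(
        range(len(palette)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(color, palette[i])),
    )

def split_by_material(face_colors, palette):
    """Group face indices by the palette material each face maps to."""
    groups = defaultdict(list)
    for face_index, color in enumerate(face_colors):
        groups[nearest_material(color, palette)].append(face_index)
    return dict(groups)

# Hypothetical 4-material palette: white, black, red, blue.
PALETTE = [(255, 255, 255), (0, 0, 0), (200, 30, 30), (30, 60, 200)]

# Hypothetical per-face colors for a five-face mesh.
face_colors = [
    (250, 250, 245),
    (10, 5, 5),
    (190, 40, 35),
    (20, 70, 210),
    (240, 240, 240),
]

groups = split_by_material(face_colors, PALETTE)
```

Each group could then be exported as its own mesh part for one printer material. Extending the palette to 8 or 16 entries changes only the `PALETTE` list, though a production pipeline would also need to keep each part printable on its own.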

Hitem3D also says it supports USDZ, as well as a workflow that uses existing geometry plus reference images to generate textures, positioning it as a way to “revive” existing models, not just create new ones. A deeply integrated Blender add-on is framed as the practical bridge: less context switching, more “native” insertion into creator workflows.

The part that actually matters: usability, not spectacle

It’s easy for AI 3D announcements to devolve into a familiar loop — bigger numbers, flashier renders, vague claims about “realism.” Hitem3D’s argument is that the next phase of 3D generation isn’t just about speed, but about whether the output can withstand the rigors of industrial workflows—from printing tolerances and structural integrity to material consistency and reduced rework rates.

If Hitem3D 2.0 delivers on this promise—providing integrated generation that holds up structurally and operationally—it represents a pivotal transition. It moves AI-generated assets from the realm of "cool demos" into a reliable, trust-based framework for B2B production.

The real test, as always, is what happens when users push it beyond the cherry-picked examples: thin features, complex joints, extreme occlusion, and the messy variety of real-world assets. But the direction is clear: 3D generation is trying to grow up from “content acceleration” into a repeatable production capability — and Hitem3D wants to be one of the systems building that bridge.